The Gentoro Blog

MCP Weekly: Slack Goes Agent-Native, Google Database MCP, OpenAI Personal Agents

This week in MCP: Slack’s agent-native servers, Google’s Managed MCP for Spanner, Cloud SQL and more, Gemini 3.1 Pro and Deep Think, Claude Sonnet 4.6, Microsoft’s Security Dashboard for AI, plus new infra funding.

MCP Weekly: EU Challenges Meta, OpenAI Targets Enterprise, and Claude Opus 4.6 Arrives

This week’s MCP Weekly: EU probes Meta’s WhatsApp AI lockout, OpenAI ships Frontier and tests ChatGPT ads, Anthropic launches Claude Opus 4.6, plus hosted MCP.

From Moltbook to Real Work: Breaking the Agent Hype Cycle

The Moltbook fiasco exposed the AI agent hype cycle in real time. Here’s why real agent ROI depends on execution, security, and observability.

MCP Weekly: OpenAI x Snowflake, Codex on Mac, Xcode Goes Agentic

From Xcode to Snowflake, agents are going first-party. This week's digest breaks down Codex on Mac, GitHub Agent HQ, OpenAI’s $200M Snowflake partnership, and why Moltbook put agent security back in the spotlight.

MCP Weekly: Anthropic's Constitution, GitHub Copilot SDK, and OpenAI's $30B Bet

This MCP Weekly covers Anthropic’s new Claude constitution and MCP tool integrations, GitHub’s Copilot SDK technical preview, OpenAI’s Prism and Codex agent loop details, plus a fresh $30B SoftBank move around OpenAI.

MCP Weekly: OpenAI’s $250M BCI Bet, Azure MCP GA, Agentforce MCP

This week's digest: ChatGPT Go goes global, OpenAI taps Cerebras for 750MW inference, and MCP spreads across Azure Functions, Salesforce, AWS, and Google Cloud.

MCP Weekly: The Agentic Explosion Hits Commerce, Work, and Cloud

MCP Weekly kicks off 2026 with a look at the ways the agentic shift is reshaping AI, from UCP’s launch and MCP storefronts to desktop coworkers and the rise of execution-first infrastructure.

What is the Universal Commerce Protocol (UCP)?

Universal Commerce Protocol (UCP) is Google’s agentic checkout standard. Learn how it enables AI agents to transact across retailers without custom APIs.

MCP Inspector Explained: Debugging at the Protocol Layer

Learn how MCP Inspector works, why AI clients hide real MCP errors, and how to debug MCP servers by inspecting raw JSON-RPC traffic, schemas, auth, and lifecycle events directly.

The Agentic Shift: 2025’s Real Breakout for Agentic AI

A 2025 retrospective on the agentic shift: MCP and AAIF standards, massive AI infrastructure spend, enterprise agents in production, and the new rules shaping autonomous systems.

MCP Weekly: The Rise of the Agentic Web and National-Scale AI

This MCP Weekly covers Anthropic’s Genesis Mission with the U.S. Department of Energy, new Agent Skills and GPT-5.2 Codex advances, plus a critical Cursor MCP RCE that exposes rising agent security risks.

MCP Weekly: Copilot Goes Agentic, Security Reality Sets In, and MCP Reaches the UI Layer

This MCP Weekly tracks Copilot’s shift into agentic AI, the security reality of production agents, and MCP’s move from backend plumbing into the UI layer.

The Integration Hangover: Why Enterprise AI ROI Is So Hard to Prove

Enterprise AI struggles to deliver results when execution breaks across systems. A look at how fragmentation limits real enterprise outcomes.

MCP Weekly: Agentic AI Foundation, Cloud Momentum, and New Security Tools

This MCP Weekly covers MCP’s move to the Linux Foundation, rising support from AWS and Google Cloud, and new security-focused MCP servers for production use.

Why We Built Gentoro OneMCP: A New Runtime Model for Agent–API Integration

Learn how a real customer challenge led us to create Gentoro OneMCP, an open-source runtime that replaces large MCP toolsets with a single, plan-driven interface.

MCP Weekly: Cloud Standardization, Security Platforms, and $200M Agent Investment

A major week for MCP as AWS unifies cloud architecture, security platforms emerge to protect agent actions, and Snowflake and Anthropic commit $200M to governed AI agents.

UTCP vs. MCP: Simplicity Isn’t a Substitute for Standards

We break down UTCP’s promises, its architectural pitfalls, and why MCP remains the most durable standard for secure, production-grade AI agents.

MCP Weekly: Ecosystem Maturity, Supply-Chain Risks, and Enterprise Adoption

This week: MCP turns one as OpenAI’s supply-chain breach underscores rising security demands and major vendors adopt MCP for payments, infrastructure, and long-running agents.

MCP Turns One: Tasks, Extensions, and Agent Infrastructure’s Next Phase

Anthropic’s latest MCP spec is here! No cake, but task workflows, extensions, and enterprise-grade auth. We break down what’s new, what matters most, and what’s coming next for agent infrastructure.

MCP Weekly: Enterprise Adoption, Agent Coordination, and Power BI’s Big Leap

This week: Power BI’s server debut, evolving agent frameworks, and expanding enterprise-grade security and tooling. Plus: lessons from the Cloudflare outage.

Browser vs. Workflow for Sales Automation: What Actually Works?

Trying to choose between Zapier, n8n, and browser-based frameworks for sales automation? We tested all three and added Gentoro to extend what workflows can do.

MCP Weekly: Security and Large-Scale Enterprise Integration

This week: Agent Sandbox, an MCP-powered cyber espionage case, D365 ERP, Context Forge, and new GPT-5.1 tools show MCP becoming core enterprise infrastructure.

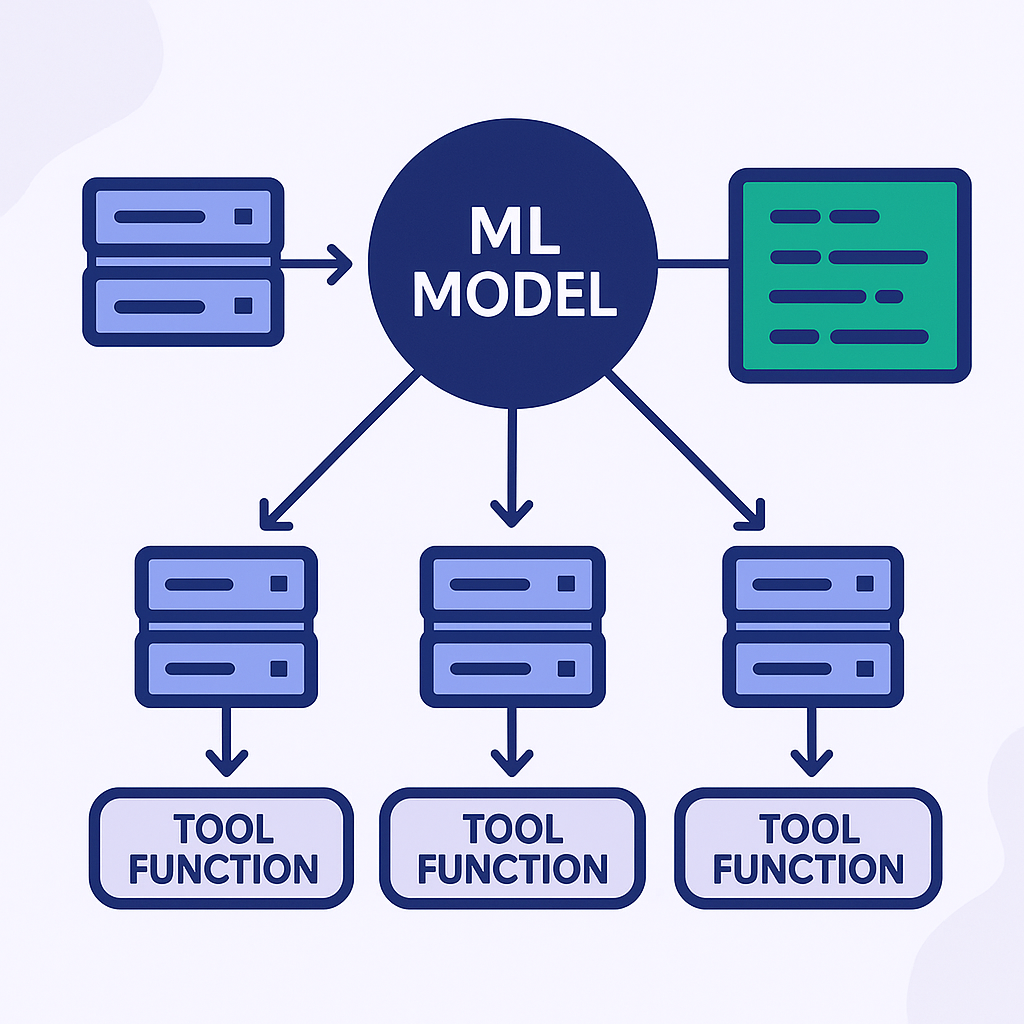

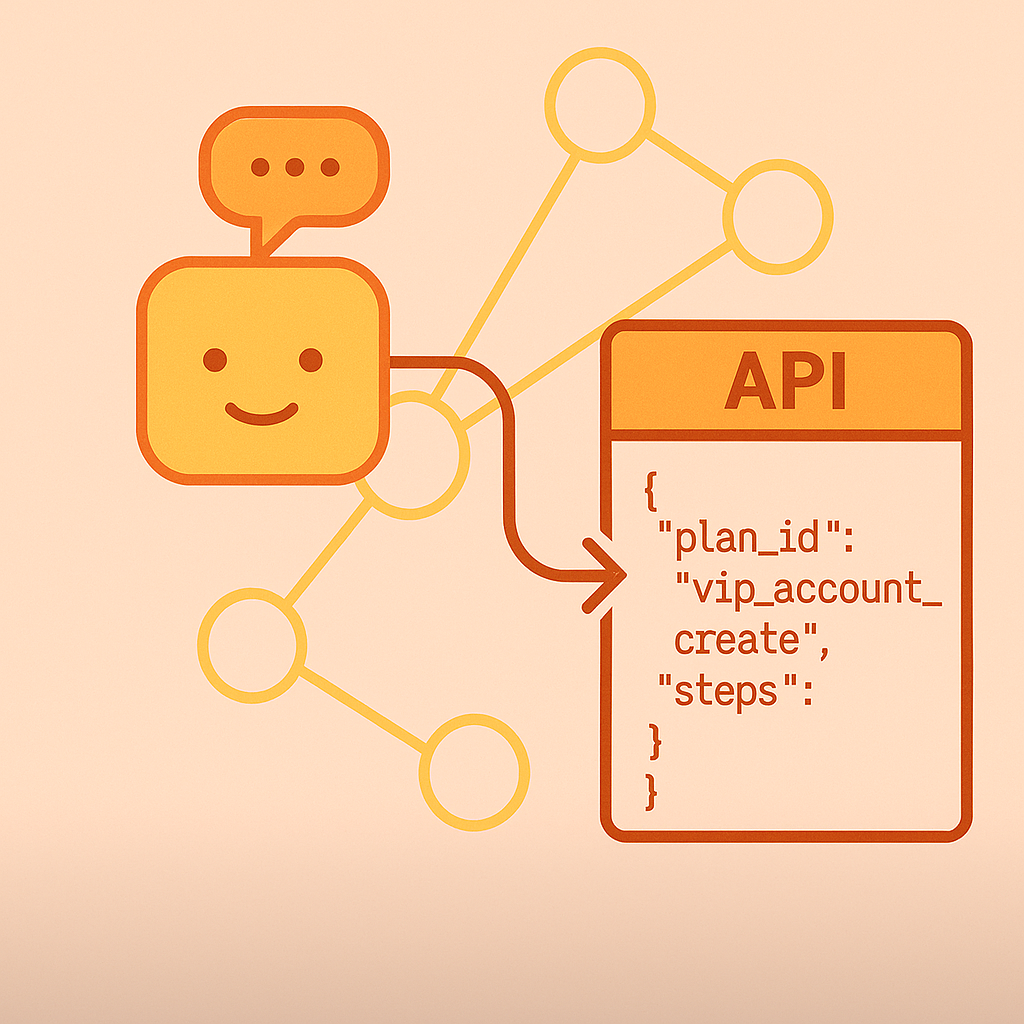

Tool Invocation via Code Generation—and Beyond

What if agents didn’t have to call tools directly? There's a new execution model for agent systems that separates planning from doing, reduces token cost, and makes interactions with APIs faster and more reliable.

Why Agents Must Think in Code: Lessons From Anthropic’s MCP Evolution

Anthropic’s latest blog highlights a major shift in AI agent design. Here’s what this means for the future of MCP-based workflows.

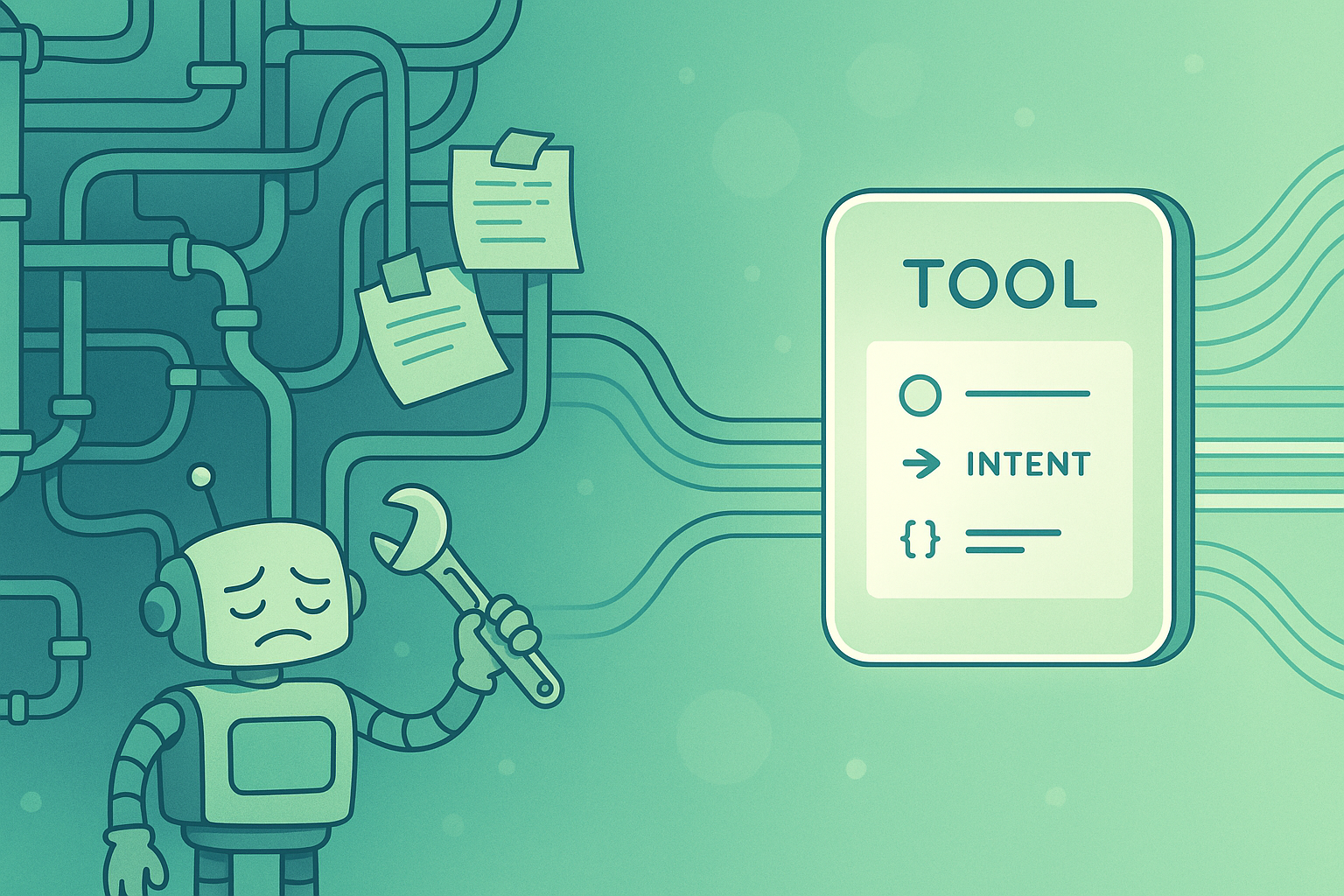

Agent Interfaces Are the New APIs

AI agents can call tools, but can they use them reliably? Explore how agent-native interfaces enable smarter, faster, and more scalable AI integration.

Did Anthropic Just Teach Agents to Remember? Inside Claude’s New Skills Feature

Anthropic’s new Skills system gives Claude reusable, modular abilities that live outside the prompt. Here’s what it means for developers, enterprises, and the next wave of agentic AI.

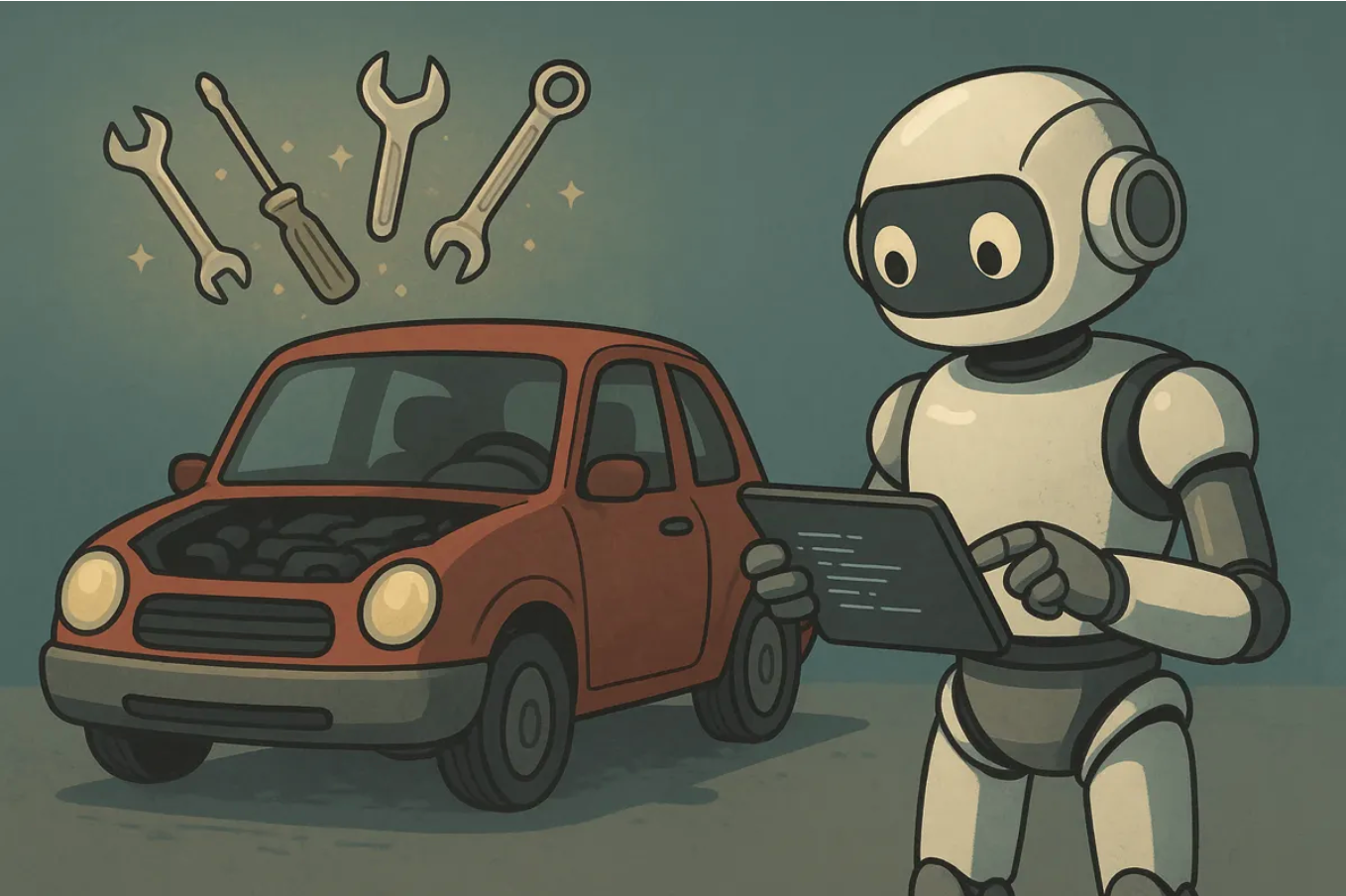

Why the Future of AI Integration Depends on Tooling Infrastructure

Wrappers and retries and patching, oh my! Discover why current AI integration methods don’t scale and how smarter tooling can fix it.

The Interface Gap: Why LLMs Still Struggle with OpenAPI

OpenAPI makes APIs machine-readable, but not agent-usable. Learn why LLMs struggle with traditional specs and how a semantic interface layer like MCP helps.

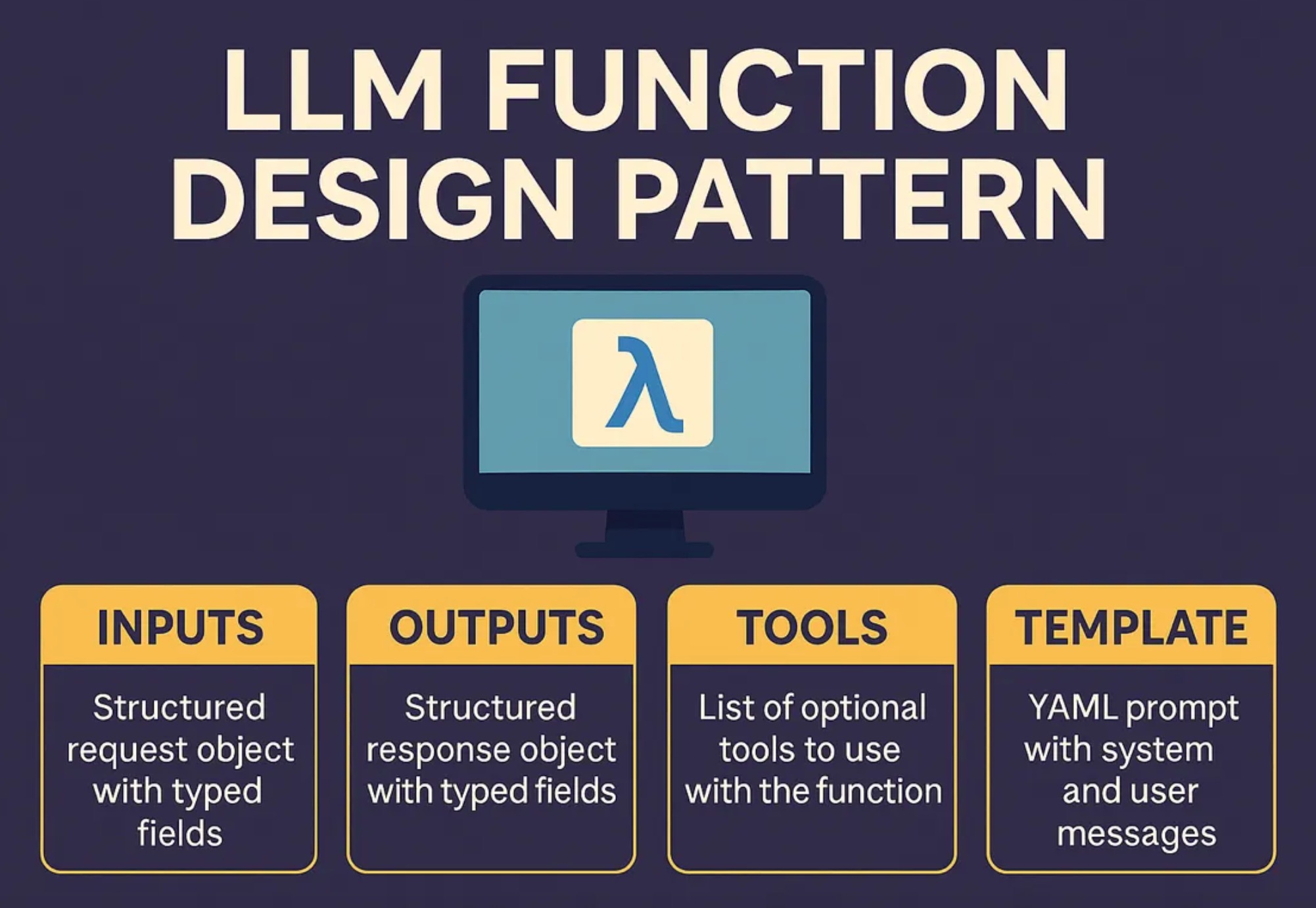

The LLM Function Design Pattern

Learn how the LLM Function Design Pattern reduces fragility in AI apps by consolidating prompts, inputs, outputs, and tools into a single structured unit.

Agents vs. Tools Is Over: MCP Elicitations Changed the Game

Agents vs. tools? With MCP elicitations, it’s no longer a real distinction. See how this shift changes how developers build AI workflows.

What Is Anthropic’s New MCP Registry? A Guide for Developers & Enterprises

What exactly is Anthropic’s new MCP Registry? How does it differ from npm-style repos? And what does it mean for existing registries and developers? Find out here.

From OpenAPI Specs to MCP Tools: Gentoro’s Agent-Aligned Advantage for Enterprises

Generate secure, enterprise-ready MCP Tools from OpenAPI specs. Discover why Gentoro’s agent-aligned platform outperforms SDK-based generators for enterprise AI.

Top App: Emergent vs Lovable in the Same No-Code Kitchen

A hands-on look at AI no-code platforms, comparing speed, flexibility, and real-world usability when building a live restaurant booking app.

Orchestrating Real-world Agentic Workflows

How Gentoro built a custom MCP Tool that connects Slack, HubSpot, and Notion to automate workflows for a global musician community with agentic AI.

MCP Security Essentials: Protecting Servers and Tools at Scale

What are MCP security essentials? Learn MCP server security best practices for authentication, authorization, and compliance with Gentoro.

Why MCP Is Essential for Agentic AI

Discover how MCP bridges the gap between traditional APIs and reasoning agents by providing semantic context, structured outputs, and more.

How to Integrate OpenTable With AI Agents Using a Custom OpenAPI Spec

Gentoro enables seamless AI integration with OpenTable, even without a public OpenAPI spec. Build MCP Tools from custom YAML in minutes.

Why MCP Needs an Orchestrated Middleware Layer

MCP needs an orchestrated middleware layer to work reliably. Learn how Gentoro helps scale agentic workflows with model-aware, reusable runtime infrastructure.

Why Do MCP Tools Behave Differently Across LLM Models?

Why do MCP Tools behave differently across LLM models? Learn how model assumptions impact tool usage, and explore practical strategies to improve reliability.

Why Traditional Regression Testing Doesn’t Work for MCP Tools

Traditional regression testing fails for modern AI systems powered by MCP. Learn how to rethink testing for non-deterministic LLM-driven workflows.

Why Enterprise Systems Aren’t Ready for AI Agents (Yet)

Enterprise systems aren’t ready for AI agents. From integration bottlenecks to outdated infrastructure, here's why adoption stalls and how Gentoro can help.

From Connection Chaos to Intelligent Integration

Gentoro is now GA! Use Gentoro to go from jury-rigging together APIs to vibe-coding production-ready, fully usable MCP Tools.

Building MCP Tools: From Protocol to Production

Building MCP Tools for AI agents isn’t easy. Learn how Gentoro's vibe-based approach simplifies MCP Tool generation.

Connecting Agents to the Enterprise With MCP Tools

Most APIs aren’t built for AI agents. Learn how MCP Tools provide a secure, intent-based interface that bridges LLMs and enterprise systems.

How MCP Tools Bridge the Gap Between AI Agents and APIs

Most APIs aren’t built for AI agents. Learn how MCP Tools provide a secure, intent-based interface that bridges LLMs and enterprise systems.

How MCP Leverages OAuth 2.1 and RFC 9728 for Authorization

Learn how the Model Context Protocol (MCP) adopts OAuth 2.1 and RFC 9728 to enable dynamic, secure authorization for AI agents and agentic tools.

Turn Your OpenAPI Specs Into MCP Tools—Instantly

Introducing a powerful new feature in Gentoro that lets you automatically generate MCP Tools from any OpenAPI spec—no integration code required.

Navigating the Expanding Landscape of AI Applications

Learn how developers are navigating the complex landscape of LLM apps, AI agents, and hybrid architectures — and how protocols like MCP and A2A are shaping the future of AI integration.

Deploying a Production Support AI Agent With LangChain and Gentoro

Deploy an AI agent using LangChain and Gentoro to automate incident detection, analysis, team alerts, and Jira ticket creation, enhancing production support efficiency.

Announcing: Native Support for LangChain

Build production-ready AI agents faster with Gentoro’s native LangChain support—no more glue code, flaky APIs, or auth headaches.

What Are Agentic AI Tools?

Agentic tools let AI act beyond text generation, using inferred invocation to interact with real-world applications. Learn how MCP simplifies AI-tool connections.

LangChain: From Chains to Threads

LangChain made AI development easier, but as applications evolve, its limitations are showing. Explore what’s next for AI frameworks beyond chain-based models.

Vibe Coding: The New Way We Create and Interact With Technology

Vibe coding, powered by generative AI, is redefining software creation and interaction. Learn how this paradigm shift is transforming development and user experience.

Rethinking LangChain in the Agentic AI World

LangChain is powerful but manually intensive. What if agentic AI handled the complexity? Explore how directive programming could redefine AI development.

Introducing Model Context Protocol (MCP) Support for Gentoro

Discover how Gentoro’s support for Model Context Protocol (MCP) simplifies AI tool integration, enabling smarter workflows with Claude Desktop and more.

LLM Function-Calling vs. Model Context Protocol (MCP)

Explore how function-calling and MCP simplify LLM usage in enterprise workflows, and the distinct role each plays in development.

Using MCP Server to Integrate LLMs Into Your Systems

Learn how MCP servers streamline enterprise LLM development, overcome framework hurdles, and power scalable, efficient generative AI applications with ease.

LLM Function-Calling Performance: API- vs User-Aligned

Discover how API-aligned and user-aligned function designs influence LLM performance, optimizing outcomes in function-calling tasks.

Building Bridges: Connecting LLMs with Enterprise Systems

Uncover the technical hurdles developers encounter when connecting LLMs with enterprise systems. From API design to security, this blog addresses it all.

Contextual Function-Calling: Reducing Hidden Costs in LLM Function-Calling Systems

Understand the hidden token costs of OpenAI's function-calling feature and how Contextual Function-Calling can reduce expenses in LLM applications.

Simplifying Data Extraction with OpenAI JSON Mode and Schemas

Discover how to tackle LLM output formatting challenges with JSON mode and DTOs, ensuring more reliable ChatGPT responses for application development.

Why Function-Calling GenAI Must Be Built by AI, Not Manually Coded

Learn why AI should build function-calling systems dynamically instead of manual coding, and how to future-proof these systems against LLM updates and changes.

User-Aligned Functions to Improve LLM-to-API Function-Calling Accuracy

Explore function-calling in LLMs, its challenges in API integration, and how User-Aligned Functions can bridge the gap between user requests and system APIs.

Function-based RAG: Extending LLMs Beyond Static Knowledge Bases

Learn how Retrieval-Augmented Generation (RAG) enhances LLMs by connecting them to external data sources, enabling real-time data access and improved responses.

Read the Latest

News & Articles

Gentoro Announces OneMCP, an Open-Source Layer for Accurate API Calls by AI Agents

Gentoro has launched the open-source prototype of OneMCP, a knowledge-driven runtime for the Model Context Protocol (MCP) that enables AI agents to interact with backend APIs accurately, consistently, and at lower cost.

Gentoro at Tomorrow Street Scaleup X Programme-Silicon Luxembourg

Gentoro is one of twenty emerging companies to participate in Tomorrow Street's Scaleup X Programme.