UTCP vs. MCP: Simplicity Isn’t a Substitute for Standards

As an AI developer, you’ve almost certainly worked with or experimented with the Model Context Protocol (MCP) by now. MCP just turned one, and the adoption curve is basically vertical. Just about everyone is exploring MCP: Google, Microsoft, Amazon, enterprise platforms, indie tool builders, and maybe even my grandma (not really).

Right in the middle of those anniversary streamers and balloons, I stumbled across a new contender: UTCP, the “Universal Tool Calling Protocol.”

On paper, it sounds awesome: a lightweight, "direct" alternative to MCP that promises to remove the middleman. Naturally, I dove into the documentation to see if we were looking at the next big thing in AI agent standards. Was UTCP going to be the fresh-coded challenger that makes MCP look old overnight?

After reading the spec and thinking through real-world deployments, my answer is: not yet. In this post, I’ll walk through what UTCP gets right, where it falls short, and why I’m not ready to throw away MCP (or its wrappers) anytime soon.

UTCP’s Big Promise: Direct Tool Calling Without MCP’s Mediation

To understand why UTCP is making waves, you have to look at the one thing it removes: the server.

UTCP positions itself as a modern, lightweight open standard that lets AI agents directly discover and call existing tools and APIs (like HTTP, CLI, or GraphQL) without needing wrapper servers. Instead of standing up an MCP server to expose a tool, you publish a standardized JSON “manual” at a known endpoint. The agent grabs that manual, learns what your tool can do, and then calls your tool directly over its native interface.

According to the official documentation, this flips the traditional integration model on its head. Instead of paying the "wrapper tax," you simply define your tool’s capabilities in a static file. The documentation lists the benefits: no added latency, because calls go straight to the tool; easy integration through a single endpoint, with no infrastructure changes; protocol flexibility spanning HTTP, MCP, CLI, and GraphQL; and scalability that relies on your current monitoring tools. Crucially, it claims to preserve native security by leveraging your existing authentication, promising a frictionless path to making your entire software ecosystem agent-ready without deploying a single new container.

It’s an elegant pitch: your tools stay exactly as they are, your infrastructure doesn't change, and suddenly everything becomes agent-ready. From a developer’s perspective, it feels like the protocol equivalent of: “What if we just didn’t add all that extra stuff?”

I get the appeal. But it’s easy enough to make promises. Is this thing actually going to work in production?

UTCP vs. MCP: Behind the Scenes

To truly weigh the trade-offs, we must look at the distinct architectural paths these protocols take to bridge the gap between AI models and your infrastructure.

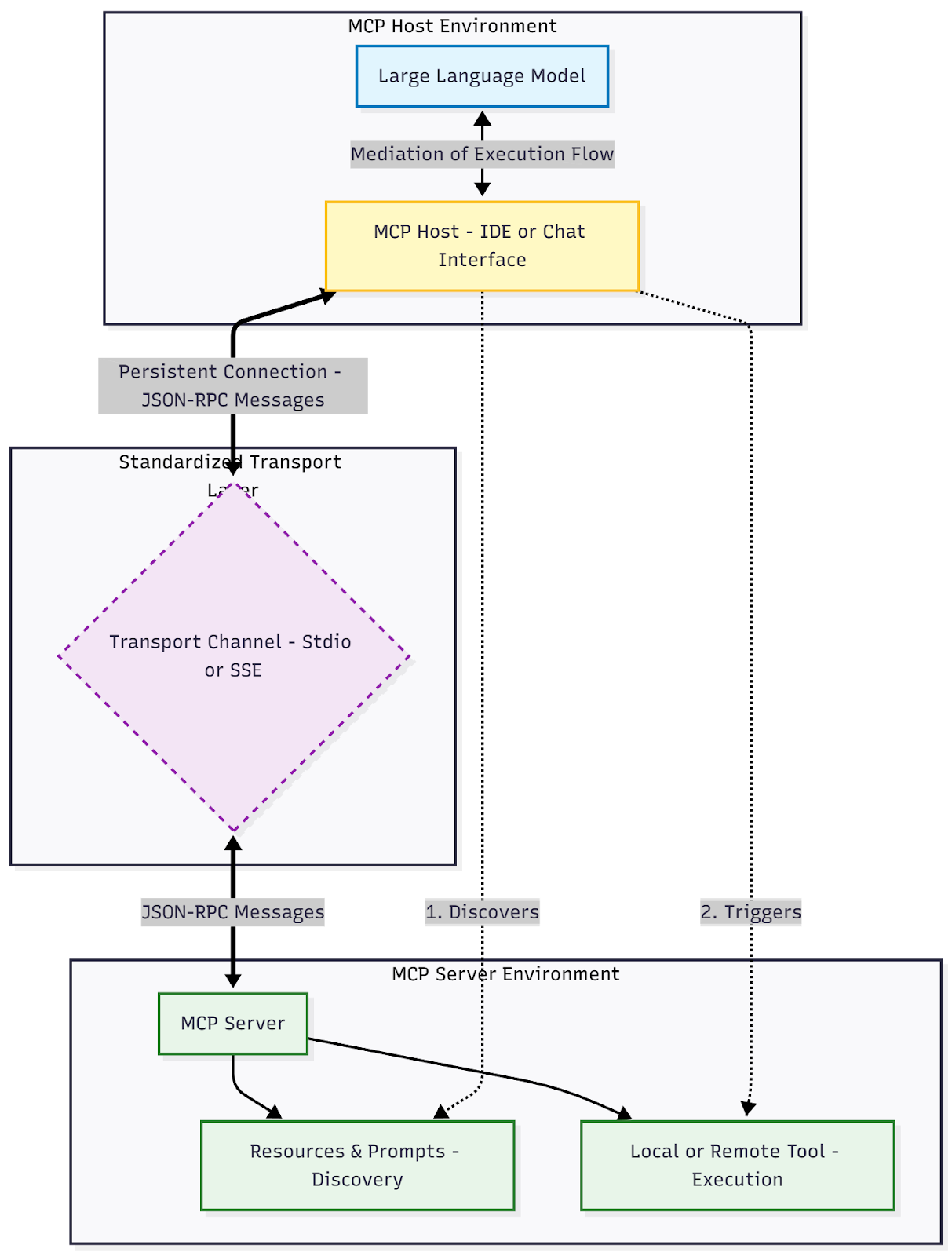

Figure 1: MCP Architecture: Host, Transport and Service Interaction Flow

How MCP Works: MCP operates on a strict Client-Host-Server architecture. An MCP Host (like an IDE or chat interface) runs a client that connects to an MCP Server over a standardized transport layer (typically stdio or HTTP with SSE streaming). Through JSON-RPC message passing, the host keeps a persistent connection open to discover tools, resources, and prompts, actively mediating every execution flow between the LLM and the local or remote tool.
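To make the message passing concrete, here is a minimal sketch of the two JSON-RPC 2.0 requests a client sends for tool discovery and tool execution. The method names `tools/list` and `tools/call` come from the MCP specification; the tool name `get_weather`, its arguments, and the helper `jsonrpc_request` are hypothetical, and the sketch only builds the messages rather than sending them over a transport.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids must be unique per connection


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request object as a plain dict."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Discovery: ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Execution: call one tool by name.
# "get_weather" and its arguments are made up for illustration.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Berlin"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool))
```

Both requests travel over the same connection to the same server, which is what lets the host mediate every call.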

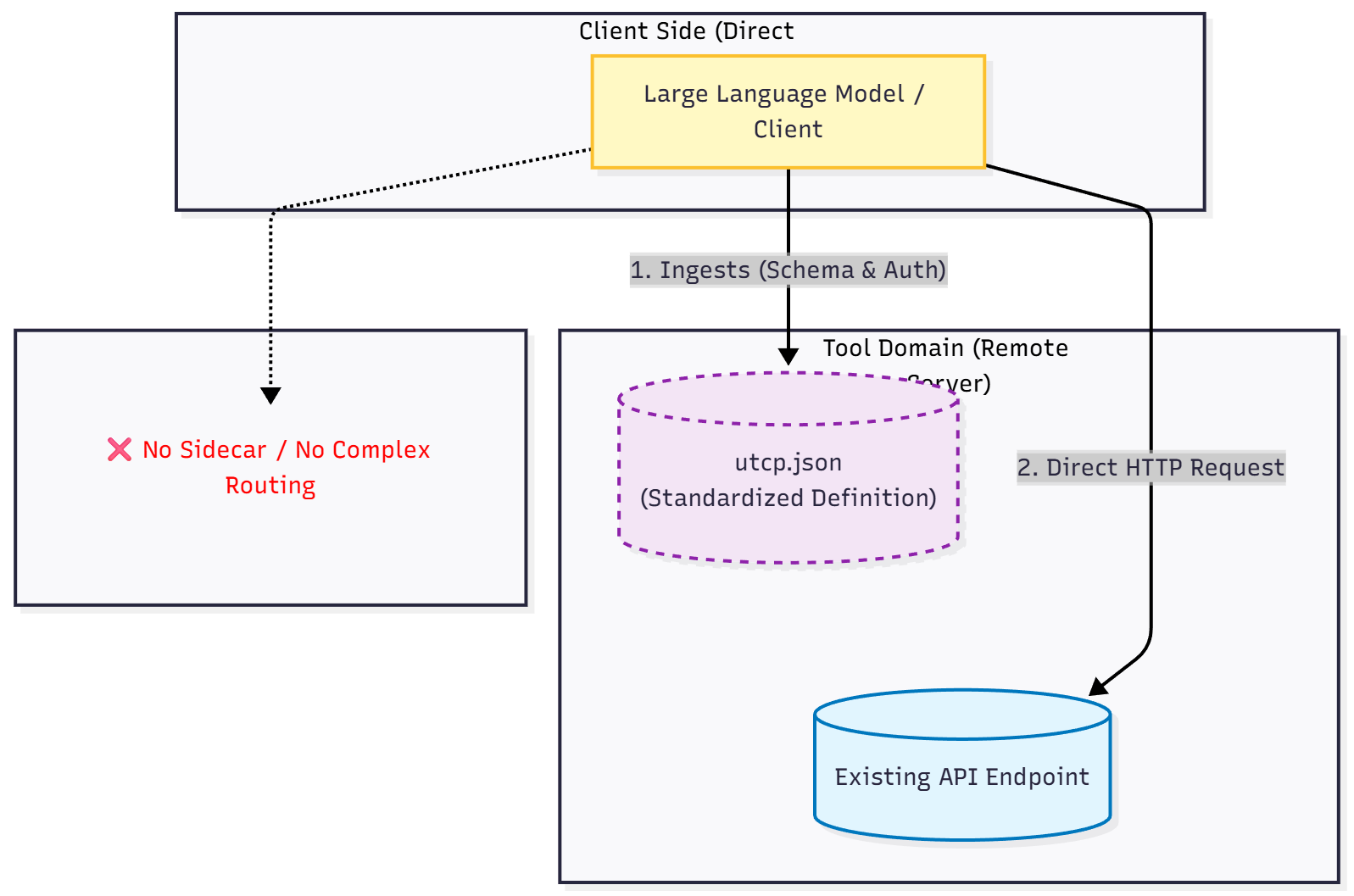

Figure 2: UTCP Architecture: Direct Client-Side Tool Execution Flow

How UTCP Works: UTCP removes the active intermediary entirely by relying on a standardized definition file (often utcp.json) hosted directly on the tool's domain. The agent ingests this "instruction manual" to understand the API's schema, authentication methods, and capabilities upfront. When a task is triggered, the agent constructs a direct request (HTTP, in the common case) to the existing endpoint, bypassing the need for a dedicated sidecar server or complex message routing.
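Here is a rough sketch of that flow, under loud assumptions: the `MANUAL` structure below is invented for illustration and is not the real UTCP schema, and the URL, header name, and `build_request` helper are all hypothetical. The point is only to show the shape of the idea: read a static description, then build a request aimed straight at the existing endpoint.

```python
import urllib.request
from urllib.parse import urlencode

# Hypothetical manual. Field names are illustrative, NOT the UTCP schema.
MANUAL = {
    "tools": [
        {
            "name": "get_weather",
            "http": {"method": "GET", "url": "https://api.example.com/weather"},
            "inputs": ["city"],
            "auth": {"header": "X-Api-Key"},
        }
    ]
}


def build_request(manual, tool_name, args, api_key):
    """Turn a manual entry plus arguments into a direct HTTP request."""
    tool = next(t for t in manual["tools"] if t["name"] == tool_name)
    missing = [name for name in tool["inputs"] if name not in args]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    url = tool["http"]["url"] + "?" + urlencode(args)
    request = urllib.request.Request(url, method=tool["http"]["method"])
    # The tool's existing auth scheme is reused as-is.
    request.add_header(tool["auth"]["header"], api_key)
    return request


# Build (but do not send) the request the agent would make.
req = build_request(MANUAL, "get_weather", {"city": "Berlin"}, api_key="demo-key")
print(req.get_method(), req.full_url)
```

Nothing sits between the agent and `api.example.com` here, which is both the selling point and the source of the risks discussed next.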

The Hidden Risks Behind UTCP’s “Direct Access” Model

UTCP’s promise of cutting out the middleman sounds great until you look at the operational edge cases. Direct access brings direct fragility, and the tradeoffs surface quickly once you step outside the path of least resistance.

1. The Mandatory Manual Problem

UTCP requires the agent to fetch a “manual” from a dedicated endpoint before doing anything. This is similar to an HTTP OPTIONS call, but with a critical difference: OPTIONS is optional. The UTCP manual fetch is mandatory. So if that endpoint is down, your agents are bricked.

You end up making a request just to figure out how to make the real request. To me, that feels like a step backward in interaction design.
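A toy sketch of that dependency, with everything hypothetical (the function names, the 503 error, and the manual shape are all made up): the tool itself is perfectly healthy, but because the manual fetch fails first, the real call is never attempted.

```python
class ManualUnavailable(Exception):
    """Raised when the manual endpoint cannot be reached."""


def fetch_manual_from_down_endpoint():
    # Stand-in for an HTTP GET against the manual URL that fails.
    raise ManualUnavailable("manual endpoint returned 503")


tool_calls = []  # records every request that actually reaches the tool


def healthy_tool(city):
    tool_calls.append(city)
    return f"weather for {city}"


def agent_call(fetch_manual, tool, city):
    manual = fetch_manual()  # mandatory first hop; raises if the endpoint is down
    if "get_weather" not in manual["tools"]:
        raise LookupError("tool not described in manual")
    return tool(city)


try:
    result = agent_call(fetch_manual_from_down_endpoint, healthy_tool, "Berlin")
except ManualUnavailable as exc:
    result = f"blocked: {exc}"

print(result)
print(len(tool_calls))  # the healthy tool was never contacted
```

Caching the manual on the client would soften this, but then you are maintaining cache invalidation for a file that describes a moving target.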

2. The Versioning "Race Condition"

My biggest concern with UTCP is consistency. How do you guarantee that the "manual" (the description) and the "tool" (the execution) are perfectly in sync?

Consider a scenario that is quite possible at scale:

- The Agent fetches the Manual.

- The Tool deploys a breaking change.

- The Agent calls the Tool based on the (now outdated) Manual.

Congratulations! You’ve just built a race condition into your integration layer.
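The sequence above can be simulated in a few lines. This is a toy model, not UTCP code: the `Tool` class, its `city` and `location` parameters, and the version numbers are all invented to show how a manual fetched before a deploy goes stale.

```python
class Tool:
    """Toy tool whose single parameter gets renamed in a breaking deploy."""

    def __init__(self):
        self.version = 1
        self.param = "city"

    def manual(self):
        # Snapshot of the tool's interface at fetch time.
        return {"version": self.version, "params": [self.param]}

    def deploy_breaking_change(self):
        self.version = 2
        self.param = "location"  # renamed parameter

    def call(self, **kwargs):
        if set(kwargs) != {self.param}:
            raise TypeError(f"v{self.version} expects '{self.param}', got {sorted(kwargs)}")
        return f"weather for {kwargs[self.param]}"


tool = Tool()
manual = tool.manual()          # 1. the agent fetches the manual
tool.deploy_breaking_change()   # 2. the tool deploys a breaking change
try:
    # 3. the agent calls the tool using the stale manual
    tool.call(**{manual["params"][0]: "Berlin"})
    outcome = "ok"
except TypeError as exc:
    outcome = f"failed: {exc}"

print(outcome)
```

The agent did everything right according to the manual it held, and the call still failed. In a real rollout the window between steps 1 and 3 can be seconds or hours, depending on how long clients hold on to the manual.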

MCP largely sidesteps this: the same server that advertises a tool also executes it, so calls are mediated through a stable, versioned contract. UTCP pushes that responsibility back onto developers and deployment strategies (blue/green, canary, multi-region), all of which can drift subtly out of sync.

3. Standards, Semantics, and the Cost of “Just Use Your API”

The core principle of UTCP is maintaining the semantics of your original tool interactions without changing anything for the agent. "Don't change your code" does seem super enticing, like that third helping of pumpkin pie at Thanksgiving (I’m still trying to work off all those calories!). But it skips over the entire value of having a standard.

Agents need predictable structures for errors, permissions, retries, safety policies, and rate limits. I prefer one standard interaction pattern (MCP) over proliferating distinct paradigms for communication, error handling, and security across my agents. Furthermore, I often want different rules for my agents than for my human users. With a dedicated MCP layer, I can enforce stricter rate limits and distinct permissions for agents, something UTCP’s "direct access" model makes much harder to implement cleanly.
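As a sketch of what “different rules for agents” can look like in a mediation layer, here is a small fixed-window rate limiter that applies a separate quota per caller kind. The class name, the quotas, and the caller ids are hypothetical, and the clock is passed in explicitly so the behavior is easy to follow; a production limiter would need shared state and a more careful algorithm.

```python
from collections import defaultdict

# Hypothetical quotas: requests allowed per window for each caller kind.
LIMITS = {"human": 3, "agent": 2}


class WindowLimiter:
    """Fixed-window rate limiter with a separate quota per caller kind."""

    def __init__(self, limits, window_seconds=60):
        self.limits = limits
        self.window = window_seconds
        self.counts = defaultdict(int)  # (caller_id, window_index) -> requests seen

    def allow(self, caller_id, caller_kind, now):
        key = (caller_id, int(now // self.window))
        if self.counts[key] >= self.limits[caller_kind]:
            return False
        self.counts[key] += 1
        return True


limiter = WindowLimiter(LIMITS)

# Three requests each inside the same 60-second window.
agent_results = [limiter.allow("agent-7", "agent", now=t) for t in (0, 1, 2)]
human_results = [limiter.allow("alice", "human", now=t) for t in (0, 1, 2)]

print(agent_results)
print(human_results)
```

With a dedicated layer, this policy lives in one place. With direct access, every tool has to learn to tell agents from humans on its own.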

Final Thoughts: MCP Isn’t Winning by Accident

For sure, UTCP has some clever ideas. It tries to meet developers where they are, using the endpoints they already have. Developer convenience is a great on-ramp, but it’s not a foundation for a standard. Standards survive because they enforce shared rules, shared semantics, and shared expectations, even when that requires more structure than people want at first.

MCP is winning because it provides a predictable contract. It decouples the what (the tool) from the how (the execution). UTCP blurs that line, reintroducing the very coupling we spent years trying to eliminate with microservices.

And yes, UTCP lands a few good punches on deployment simplicity. But knocking out a standard takes more than a lightweight spec. Right now, UTCP is an interesting experiment in direct access. MCP is the protocol that actually scales, governs, and survives contact with enterprise reality. As they say, it rolls with the punches.
