Why We Built Gentoro OneMCP: A New Runtime Model for Agent–API Integration

Gentoro OneMCP was built differently from the start. In fact, it began as a customer project. While helping a team build an analytics system that required an AI agent to interact with several of their APIs, we realized that the typical MCP approach (using a single OpenAPI spec to generate and expose dozens of tools) was not viable at scale, if it could scale at all.

How It Started: A Messy but High-Quality API

In theory, our customer had high-quality API materials and all of the information an AI agent needed to do its job. The reality was more complex: the necessary components were spread across multiple OpenAPI fragments, internal wikis and documents, and code annotations. Everything was there, but it was far from a single, unified spec.

At the time, our approach revolved around ingesting a self-contained OpenAPI specification, so the starting point seemed obvious: assemble our customer’s material and generate the spec an AI agent would need to behave the way they wanted. But, as many have learned, LLM-based agents are highly sensitive to the quality of tool descriptions, and even slight variations in how endpoints or parameters are phrased can change an agent’s behavior.

And so we found ourselves in a relentless cycle of refinement: adjusting descriptions, renaming fields, tweaking operation summaries, and then regenerating the entire specification from scratch. Lather, rinse, repeat. It was slow, repetitive, and extremely time-consuming.

Why MCP Tool Generation Doesn’t Scale in Real Projects (as We Discovered)

Even when we had a solid spec in hand, the MCP tool generation process itself slowed us down. Every new tool description, or even a small change in phrasing, meant regenerating the spec and then rebuilding the tool descriptions and implementations that depended on it.

Every experiment, no matter how small, turned out to be a tedious and expensive endeavor, which limited how quickly we could explore improvements. There was no single bottleneck; every step of our work seemed to happen in slow motion.

How Tool Bloat Increases Token Cost and Reduces Agent Accuracy

The analytics use case was dataset-driven. Because each dataset supported its own set of operations (queries, filters, aggregations, exports, and so on), it felt logical to model each dataset as its own MCP tool, tailored to that dataset’s operations.

We built several tools this way, and they worked reasonably well at small scale. But it was hard enough for the agent to interpret even one tool’s description correctly; add too many, and it started losing track of which tool handled which dataset. Once we passed a dozen tools, the model became overwhelmed and began to struggle. And although we never hit the hard context limit of the model we used, the combined tool descriptions were massive, approaching half a megabyte.
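To make that scale concrete, the sketch below builds hypothetical per-dataset tool definitions in the MCP tool shape (`name`, `description`, `inputSchema`) and measures their combined serialized size. The dataset names, description text, and the roughly four-characters-per-token ratio are assumptions for illustration; real tokenizers vary, so treat the estimate as an order of magnitude only.

```python
import json

# Rough rule of thumb (an assumption): about 4 characters per token.
CHARS_PER_TOKEN = 4


def make_dataset_tool(dataset: str, operations: list[str]) -> dict:
    """Build one hypothetical MCP-style tool definition for a single dataset."""
    return {
        "name": f"query_{dataset}",
        "description": (
            f"Run operations against the {dataset} dataset. "
            f"Supported operations: {', '.join(operations)}."
        ),
        "inputSchema": {
            "type": "object",
            "properties": {
                "operation": {"type": "string", "enum": operations},
                "filters": {"type": "object"},
            },
            "required": ["operation"],
        },
    }


def estimate_context_cost(tools: list[dict]) -> tuple[int, int]:
    """Return (total bytes, estimated tokens) for the serialized tool list."""
    size = len(json.dumps(tools).encode("utf-8"))
    return size, size // CHARS_PER_TOKEN


ops = ["query", "filter", "aggregate", "export"]
tools = [make_dataset_tool(f"dataset_{i}", ops) for i in range(100)]
size, tokens = estimate_context_cost(tools)
print(f"{len(tools)} tools, {size} bytes, ~{tokens} tokens")
```

These toy descriptions are far shorter than production ones. At roughly half a megabyte, the same heuristic gives about 500,000 / 4 = 125,000 tokens of tool descriptions, which a typical client sends to the model alongside each request.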

Yet we still had roughly 100 datasets to support. Even ideas such as sharding or routing would not have solved our fundamental scalability problem; it was becoming clear that the “many tools” model was breaking down.

Why MCP Needed a New Model for Large, Complex APIs

There had to be a better way; the success of our customer’s project, the future of our business, and our own sanity depended on finding it. We needed a workflow that was more granular and less dependent on full rebuilds.

During testing, we noticed a pattern: the model often performed better when some of the contextual material was supplied as part of the prompt rather than embedded deep inside the tool descriptions.

The realization that placement and structure mattered as much as content was enlightening, and it made us question the conventional wisdom that “everything lives in the spec.”

One Tool to Rule Them All? Rethinking Agent–API Integration

We stepped back and asked ourselves a simple question: Why should an agent have to reason across 100 tools when, conceptually, it’s all one API?

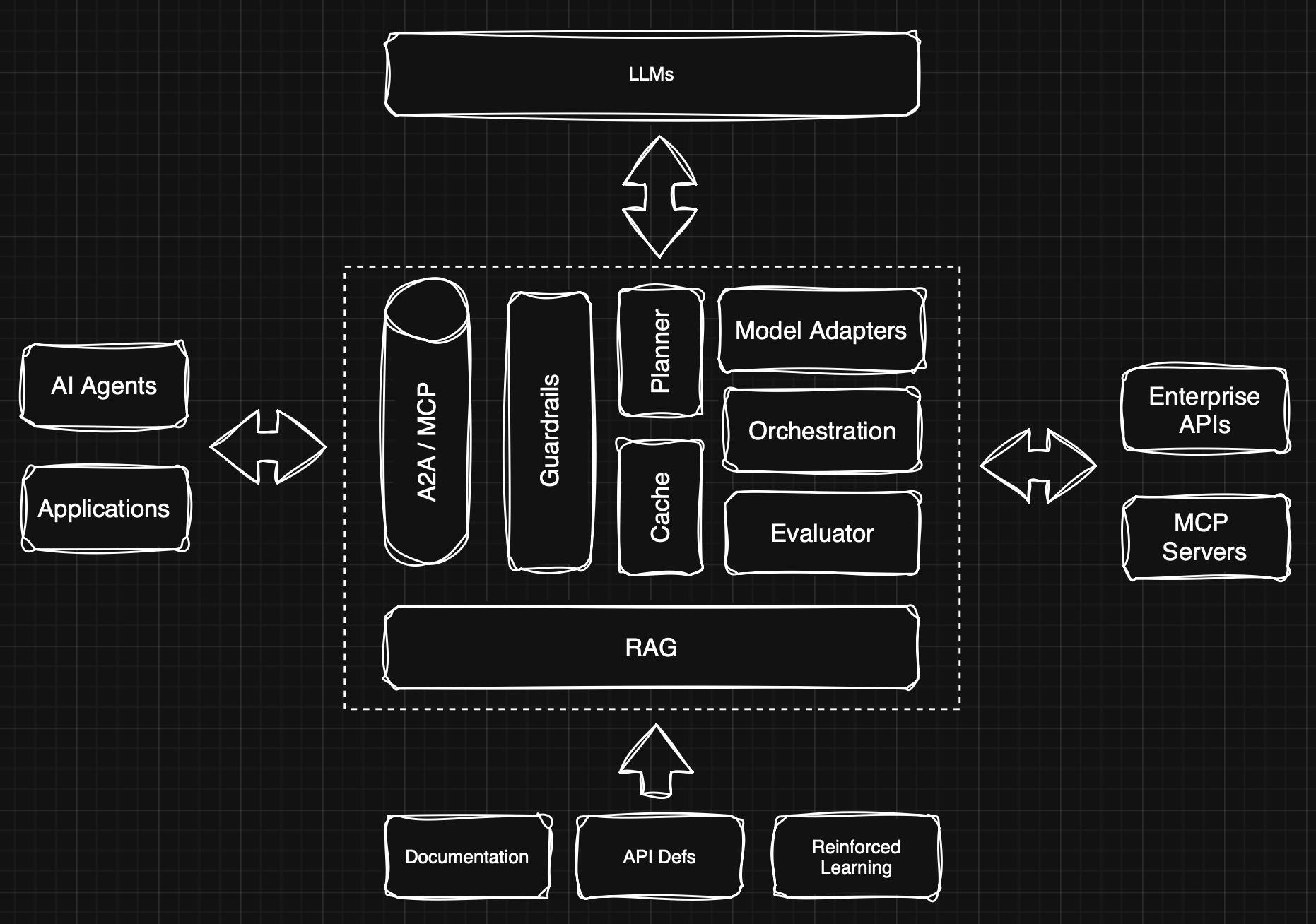

That was the turning point. We realized that the agent did not need a collection of tools; what it really needed was a single interface capable of interpreting intent and executing the corresponding API calls internally.
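For contrast with the per-dataset tools, here is what a single natural-language entry point could look like in the same MCP tool shape. The tool name `ask_api`, its description, and its schema are hypothetical, chosen for illustration; they are not OneMCP’s actual interface.

```python
import json

# A hypothetical single entry point: the agent states its intent in plain
# language, and the runtime (not the agent) decides which API calls to make.
SINGLE_TOOL = {
    "name": "ask_api",
    "description": (
        "Answer a question or perform an action against the analytics API. "
        "Describe what you need in natural language."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "prompt": {
                "type": "string",
                "description": "The request, e.g. 'total revenue by region for Q3'.",
            }
        },
        "required": ["prompt"],
    },
}

tools = [SINGLE_TOOL]
print(len(tools), "tool,", len(json.dumps(tools)), "bytes of description")
```

The agent-facing surface stays constant no matter how many datasets sit behind it: supporting another dataset changes what the runtime knows, not the tool list the model has to read.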

And that realization led to the genesis of Gentoro OneMCP: Instead of generating MCP tools, we needed to generate execution plans – the structured mappings between natural-language prompts and specific API actions.

Execution plans describe which endpoints to call, how to extract parameters, and how to combine results, without exposing dozens of separate tools to the agent. With OneMCP, the agent interacts with a single natural-language interface – the “one” in OneMCP – while the runtime manages all the complexity underneath.
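A minimal sketch of what an execution plan might contain, assuming a simple representation: an ordered list of steps, each naming an endpoint and saying where its parameters come from (values extracted from the prompt, or fields of an earlier step’s result), plus a function that combines the results. Every name here (`Step`, `ExecutionPlan`, `resolve`, `run_plan`, the endpoints, and `fake_api`) is hypothetical, and `inputs` stands in for parameter extraction that a real runtime would perform on the prompt; OneMCP’s real plan format may differ.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Step:
    endpoint: str           # which API operation to call
    params: dict[str, str]  # param name -> "$input.<key>", "$step<N>.<key>", or a literal


@dataclass
class ExecutionPlan:
    steps: list[Step]                    # calls to make, in order
    combine: Callable[[list[Any]], Any]  # how to merge the step results


def resolve(ref: str, inputs: dict[str, Any], results: list[Any]) -> Any:
    """Resolve a parameter reference against prompt inputs or earlier results."""
    if ref.startswith("$input."):
        return inputs[ref[len("$input."):]]
    if ref.startswith("$step"):
        index, key = ref[len("$step"):].split(".", 1)
        return results[int(index)][key]
    return ref  # anything else is passed through as a literal value


def run_plan(plan: ExecutionPlan, inputs: dict[str, Any],
             call_api: Callable[[str, dict[str, Any]], Any]) -> Any:
    """Execute each step in order, feeding earlier results into later steps."""
    results: list[Any] = []
    for step in plan.steps:
        args = {name: resolve(ref, inputs, results) for name, ref in step.params.items()}
        results.append(call_api(step.endpoint, args))
    return plan.combine(results)


# Stand-in for a real HTTP client so the sketch runs offline (no path templating).
def fake_api(endpoint: str, args: dict[str, Any]) -> dict[str, Any]:
    if endpoint == "GET /datasets":
        return {"dataset_id": "ds-42"}
    if endpoint == "POST /datasets/{id}/aggregate":
        return {"dataset": args["id"], "metric": args["metric"], "total": 1234}
    raise ValueError(f"unknown endpoint: {endpoint}")


# "What was total revenue in the sales dataset?" after parameter extraction:
plan = ExecutionPlan(
    steps=[
        Step("GET /datasets", {"name": "$input.dataset"}),
        Step("POST /datasets/{id}/aggregate",
             {"id": "$step0.dataset_id", "metric": "$input.metric"}),
    ],
    combine=lambda results: results[-1]["total"],
)
print(run_plan(plan, {"dataset": "sales", "metric": "revenue"}, fake_api))  # 1234
```

Because the plan is plain data plus a small combine step, it can be inspected, stored, and replayed without asking the model to reason about endpoints again.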

How Execution-Plan Caching Improves Performance and Predictability

Even after we realized that execution plans would resolve many of the problems we had faced, we had another challenge to overcome: latency. Converting a new prompt into an execution plan improves accuracy, but because it requires reasoning, it is slower than invoking a tool directly.

Introducing caching was a game changer. Execution plans are naturally cacheable, because APIs, unlike open-ended chat interfaces, have a finite set of possible intents. Once an execution plan exists for a given request pattern, it can safely be reused.

This insight became the foundation of OneMCP’s plan-based caching model:

- Preloaded Mode: Execution plans are generated ahead of time from the API spec and stored for immediate use in production.

- Dynamic Mode: Execution plans are generated on demand for new prompts, then cached for reuse in the future.
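The two modes can be sketched as one cache with a flag, assuming that a “request pattern” means an intent plus the names (not the values) of its parameters, so that “revenue for sales” and “count for returns” share one plan. The key scheme, the `PlanCache` class, and the choice to raise on a miss when dynamic generation is off are all illustrative assumptions; mapping differently worded prompts to the same intent is assumed to have happened already.

```python
from typing import Any, Callable

# Hypothetical cache key: the intent plus the sorted parameter *names*.
PlanKey = tuple[str, tuple[str, ...]]


def plan_key(intent: str, params: dict[str, Any]) -> PlanKey:
    # Parameter values change from request to request, so they stay out of the key.
    return (intent, tuple(sorted(params)))


class PlanCache:
    def __init__(self, generate: Callable[[PlanKey], Any], dynamic: bool = True):
        self._generate = generate  # the slow, reasoning-backed plan generator
        self._dynamic = dynamic    # False: serve preloaded plans only
        self._plans: dict[PlanKey, Any] = {}
        self.generated = 0         # how many times the slow path ran

    def preload(self, keys: list[PlanKey]) -> None:
        """Preloaded mode: build plans ahead of time, e.g. from the API spec."""
        for key in keys:
            self._plans[key] = self._generate(key)
            self.generated += 1

    def get(self, intent: str, params: dict[str, Any]) -> Any:
        key = plan_key(intent, params)
        if key not in self._plans:
            if not self._dynamic:
                raise KeyError(f"no preloaded plan for {key}")
            # Dynamic mode: generate on first sight, then reuse.
            self._plans[key] = self._generate(key)
            self.generated += 1
        return self._plans[key]


def make_plan(key: PlanKey) -> str:
    return f"plan for {key[0]}"  # placeholder for real plan generation


cache = PlanCache(make_plan)
cache.get("aggregate", {"dataset": "sales", "metric": "revenue"})
cache.get("aggregate", {"dataset": "returns", "metric": "count"})
print(cache.generated)  # 1: the second request reused the first plan

preloaded = PlanCache(make_plan, dynamic=False)
preloaded.preload([("export", ("dataset",))])
print(preloaded.get("export", {"dataset": "sales"}))  # plan for export
```

Keeping parameter values out of the key is what lets a finite set of intents cover an open-ended stream of concrete requests; only the first request of each pattern pays the reasoning cost.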

With OneMCP’s caching model, if the API changes, OneMCP detects the mismatches, automatically invalidates outdated plans, and regenerates only what is needed.
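One way selective invalidation could work, assuming each cached plan records the operations it uses along with a fingerprint of each operation’s definition at generation time: when the spec changes, only plans whose fingerprints no longer match (or whose operations disappeared) are flagged for regeneration. The fingerprinting scheme (SHA-256 over canonical JSON), the spec layout, and all function names are assumptions; how OneMCP itself detects mismatches is not described here.

```python
import hashlib
import json
from typing import Any


def fingerprint(operation: dict[str, Any]) -> str:
    """Stable SHA-256 of one operation's definition, via canonical JSON."""
    canonical = json.dumps(operation, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def snapshot(spec_ops: dict[str, dict[str, Any]], op_ids: list[str]) -> dict[str, str]:
    """Record the fingerprints of the operations a plan depends on."""
    return {op_id: fingerprint(spec_ops[op_id]) for op_id in op_ids}


def stale_plans(plans: dict[str, dict[str, str]],
                spec_ops: dict[str, dict[str, Any]]) -> list[str]:
    """Return ids of plans whose recorded operations changed or disappeared."""
    stale = []
    for plan_id, recorded in plans.items():
        for op_id, old_hash in recorded.items():
            current = spec_ops.get(op_id)
            if current is None or fingerprint(current) != old_hash:
                stale.append(plan_id)
                break  # one mismatch is enough to invalidate the plan
    return stale


spec_v1 = {
    "listDatasets": {"method": "GET", "path": "/datasets"},
    "aggregate": {"method": "POST", "path": "/datasets/{id}/aggregate",
                  "params": ["metric"]},
}
plans = {
    "list_all": snapshot(spec_v1, ["listDatasets"]),
    "total_by_metric": snapshot(spec_v1, ["listDatasets", "aggregate"]),
}

# v2 adds a parameter to "aggregate"; "listDatasets" is unchanged.
spec_v2 = dict(spec_v1, aggregate={"method": "POST", "path": "/datasets/{id}/aggregate",
                                   "params": ["metric", "groupBy"]})
print(stale_plans(plans, spec_v2))  # ['total_by_metric']
```

Only `total_by_metric` needs regenerating; `list_all` stays valid because nothing it depends on changed.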

What Our New Runtime Model Means for MCP and Agentic Systems

What started as a stubborn integration project became a broader reframing of how AI agents should use APIs in the first place. The real breakthrough was recognizing that the traditional MCP model forced agents to operate at the wrong altitude: too many tools, too much surface area, too much re-reasoning.

To address these issues, Gentoro OneMCP:

- Removes the need to generate and maintain sprawling MCP tool inventories

- Exposes one unified, natural-language interface for any API

- Generates deterministic execution plans that are cached, reused, and continuously validated

- Keeps those plans in sync with changing specs, without manual rebuilds or prompt surgery

The result is a new model for API integration: fast, predictable, and dramatically cheaper to operate. Instead of teaching agents to sift through dozens of tool descriptions, we let them speak naturally while the runtime handles the choreography beneath the surface.

We’re sharing Gentoro OneMCP as an open-source runtime because this shift belongs to more than one company or one customer problem. We’d love to collaborate with the developers, researchers, and teams shaping the next generation of agent systems. If you’re experimenting with MCP, building agentic workflows, or exploring new integration patterns, we invite you to join us and help push this model forward.

Check out the repo here: https://github.com/Gentoro-OneMCP/onemcp
