Why Agents Must Think in Code: Lessons From Anthropic’s MCP Evolution

Anthropic’s latest article on code execution with the Model Context Protocol (MCP) lands at a pivotal moment in the evolution of agentic AI. It articulates a challenge every serious agent developer has run into: once your agent connects to more than a handful of tools, everything slows down. Token costs rise, latency balloons, and the clean lines of reasoning that once looked elegant turn into tangled loops of tool calls and intermediate data.

At Gentoro, we’ve been exploring these same issues for months, so Anthropic’s post validates the direction we’ve been taking. As we get ready to announce something new that builds directly on these ideas, we want to unpack why this shift matters so much.

The Hidden Costs of Connecting Agents to Too Many Tools

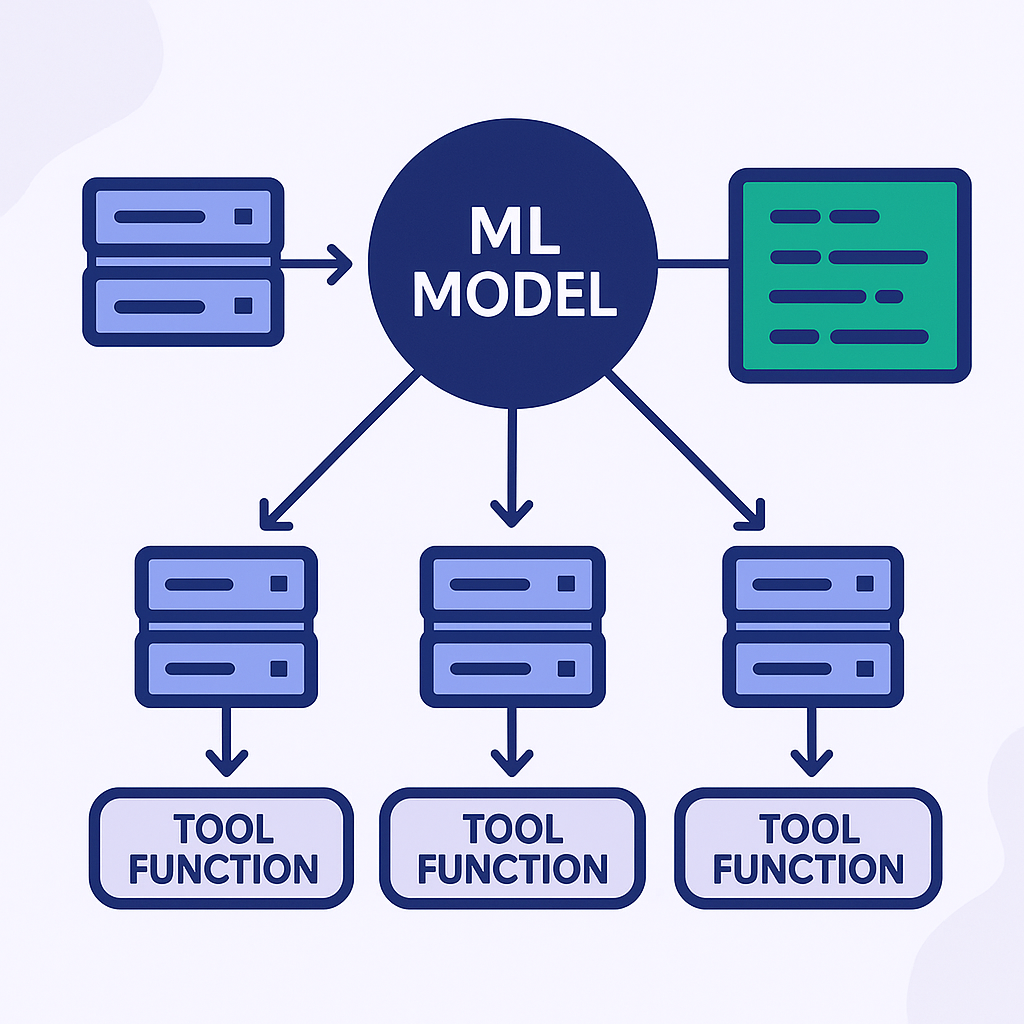

Anthropic identified a core problem. As AI agents become more capable, developers are connecting them to more tools (databases, CRMs, cloud drives, internal APIs, and more). And that makes perfect sense: the more tools your agent can use, the more useful it becomes.

Unfortunately, in practice, this plethora of tool integrations causes a sharp rise in cost, latency, and unreliability.

Anthropic highlights two major bottlenecks:

1. Tool definitions bloat the context window.

To make tools available, most agent frameworks load their definitions (including descriptions, parameters, and schemas) directly into the model’s context window. With just a few tools, that’s manageable. But when you have hundreds of tools from multiple MCP servers, you’re forcing the model to wade through hundreds of thousands of tokens before it can even begin solving the task. This slows response times and drives up token costs dramatically.

2. Intermediate results overload the model.

When agents pass data from one tool to another (say, a transcript from Google Drive into Salesforce), they often route the full result through the model’s context. That means the model processes the entire dataset multiple times. For long documents or large spreadsheets, this can exhaust the context window or break the workflow altogether.
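To make both bottlenecks concrete, here is a rough Python sketch of what a direct tool-calling loop pushes into the model’s context. The tool definitions, the tool names `get_document` and `update_record`, and the sample transcript are hypothetical, and the four-characters-per-token ratio is only a rule of thumb; real tokenizers and real MCP schemas will produce different numbers.

```python
import json

CHARS_PER_TOKEN = 4  # rough rule of thumb (assumption); real tokenizers differ


def approx_tokens(text: str) -> int:
    return len(text) // CHARS_PER_TOKEN


def make_tool_definition(i: int) -> dict:
    """A hypothetical tool definition shaped like an MCP tool listing."""
    return {
        "name": f"tool_{i}",
        "description": "Fetches records from an internal system and returns them as JSON. " * 3,
        "inputSchema": {
            "type": "object",
            "properties": {"record_id": {"type": "string"}},
            "required": ["record_id"],
        },
    }


def direct_tool_calling_context(num_tools: int, transcript: str) -> dict[str, int]:
    """Approximate what lands in the model's context when tools are called directly."""
    # Bottleneck 1: every definition is serialized into the prompt before the task starts.
    definitions = json.dumps([make_tool_definition(i) for i in range(num_tools)])
    # Bottleneck 2: the full document comes back as a tool result...
    tool_result = f"get_document result: {transcript}"
    # ...and the model has to write it out again as an argument to the next call.
    next_call = json.dumps({"tool": "update_record", "arguments": {"notes": transcript}})
    return {
        "definitions": approx_tokens(definitions),
        "intermediate_results": approx_tokens(tool_result) + approx_tokens(next_call),
    }


transcript = "Speaker A: let's review the renewal terms. " * 2_000  # hypothetical transcript
for n in (10, 100, 500):
    print(n, direct_tool_calling_context(n, transcript))
```

The definitions cost grows linearly with the number of tools and is paid before the model even reads the request, while the intermediate cost scales with the size of the data, counted at least twice.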

This kind of problem compounds quickly once you try to move an AI integration into a production environment.

The Breakthrough: Let Agents Write Code, Not Just Prompts

Here’s where Anthropic’s post gets really interesting. It proposes a shift from direct tool calls to code execution. Instead of having agents call tools directly through the model (forcing the model to carry every detail in its context), the agent writes and executes code that calls tools as needed, which is far more efficient.
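One way to picture this, sketched in Python under stated assumptions: each tool definition becomes an ordinary function that the agent’s code can call. The `call_mcp_tool` transport below is a hypothetical stub (a real client would send an MCP `tools/call` request to a server), and the `google_drive__get_document` definition is illustrative only.

```python
from typing import Any, Callable


def call_mcp_tool(tool_name: str, arguments: dict[str, Any]) -> Any:
    """Hypothetical transport stub: a real client would send a tools/call request."""
    # Echo back what would have been sent, so the sketch runs without a server.
    return {"tool": tool_name, "arguments": arguments}


def make_tool_function(definition: dict[str, Any]) -> Callable[..., Any]:
    """Turn one tool definition into a plain Python function for agent-written code."""
    name = definition["name"]
    required = definition.get("inputSchema", {}).get("required", [])

    def tool_function(**kwargs: Any) -> Any:
        # Validate locally so mistakes surface as ordinary Python errors.
        missing = [param for param in required if param not in kwargs]
        if missing:
            raise TypeError(f"{name}: missing required arguments {missing}")
        return call_mcp_tool(name, kwargs)

    tool_function.__name__ = name
    tool_function.__doc__ = definition.get("description", "")
    return tool_function


# Hypothetical definition, as it might be listed by a Google Drive MCP server.
get_document = make_tool_function({
    "name": "google_drive__get_document",
    "description": "Retrieve a document from Google Drive.",
    "inputSchema": {"type": "object", "required": ["documentId"]},
})
result = get_document(documentId="abc123")
print(result)
```

The model no longer needs every schema in its prompt; it only needs to know which functions exist, and it can read a function’s docstring when it actually plans to call it.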

It may seem like a small change in interface, but it’s actually a huge change in architecture. Rather than dumping every tool definition into the context window and hoping the model picks the right one, the agent can now:

- Explore tools programmatically (like navigating a filesystem or calling a search function)

- Load only what it needs in the moment

- Transform, filter, and combine data using code as opposed to just prompts

- Handle loops, retries, and edge cases like a developer would: with control flow, not guesswork
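A minimal sketch of programmatic tool discovery, assuming a hypothetical in-memory registry and a hypothetical `search_tools` helper with a `detail` parameter. The agent first asks for names only, then pulls full schemas just for the tools it decides to use.

```python
from typing import Any, Literal

# Hypothetical registry standing in for tool definitions exposed by MCP servers.
TOOL_REGISTRY: list[dict[str, Any]] = [
    {"name": "salesforce.update_record", "description": "Update fields on a Salesforce record.",
     "inputSchema": {"type": "object", "required": ["objectType", "recordId", "data"]}},
    {"name": "salesforce.query", "description": "Run a SOQL query against Salesforce.",
     "inputSchema": {"type": "object", "required": ["query"]}},
    {"name": "gdrive.get_document", "description": "Retrieve a document from Google Drive.",
     "inputSchema": {"type": "object", "required": ["documentId"]}},
]

Detail = Literal["name", "summary", "full"]


def search_tools(query: str, detail: Detail = "name") -> list[Any]:
    """Return only matching tools, at the level of detail the agent asks for."""
    q = query.lower()
    matches = [t for t in TOOL_REGISTRY
               if q in t["name"].lower() or q in t["description"].lower()]
    if detail == "name":
        return [t["name"] for t in matches]
    if detail == "summary":
        return [{"name": t["name"], "description": t["description"]} for t in matches]
    return matches  # "full": include the complete input schema


print(search_tools("salesforce"))               # cheap: names only
print(search_tools("query", detail="full"))     # expensive: only for the chosen tool
```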

Anthropic walks through a clear example: downloading a meeting transcript from Google Drive and attaching it to a Salesforce record. In the old model, that workflow bloated the context window by duplicating the full transcript: once when it came back as a tool result and again when the model copied it into the update call. In the new model, the agent’s code reads the transcript once, processes it, and sends the model only the result it actually needs. Anthropic reports that this code-first approach can cut token usage by more than 98%.
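The code-first version of that workflow might look roughly like the following. The wrappers `gdrive_get_document` and `salesforce_update_record`, the record ID, and the stubbed transcript are all hypothetical; the point is that the transcript moves from one tool to the other inside the execution environment, and only a one-line summary goes back to the model.

```python
# Hypothetical tool wrappers (stubs). In a real deployment these would forward to MCP servers.
def gdrive_get_document(document_id: str) -> dict:
    return {"id": document_id, "content": "Meeting notes... " * 5000}


UPDATED: dict[str, dict] = {}  # stands in for the Salesforce side


def salesforce_update_record(object_type: str, record_id: str, data: dict) -> None:
    UPDATED[record_id] = {"objectType": object_type, **data}


def agent_script() -> str:
    """Code the agent might write: data flows tool-to-tool without entering the prompt."""
    transcript = gdrive_get_document("abc123")["content"]
    salesforce_update_record("SalesMeeting", "00Q5f000001abcXYZ", {"Notes": transcript})
    # Only this short string is returned to the model's context.
    return f"Updated record with {len(transcript):,} characters of notes."


summary = agent_script()
print(summary)
```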

This is a massive shift in how we think about what agents are. It moves them from fragile, stateless prompt processors to something much more familiar and more powerful: Agents as developers. Agents that orchestrate logic. Agents that reason in code.

From Prompt Engineering to Agent Architecture

This move from direct tool calls to code execution goes beyond a clever workaround for token limits. It’s a structural shift that touches everything from cost and latency to security, reuse, and trust.

Here’s why it matters:

It’s cheaper and faster

Running everything through the model burns tokens, whether it’s reading tool definitions, processing giant datasets, or re-evaluating logic in a prompt loop. Code execution externalizes that load. Instead of spending 150,000 tokens orchestrating a multi-tool workflow, you might spend 2,000. Multiply that by thousands of users or automated tasks per day, and the ROI becomes obvious.
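The arithmetic behind that claim, as a quick Python calculation. The 150,000 and 2,000 token figures come from the paragraph above; the price per million tokens and the daily task volume are assumptions chosen for illustration, not quoted rates.

```python
# Illustrative numbers only: the per-token price and daily volume are assumptions.
PRICE_PER_MILLION_INPUT_TOKENS = 3.00  # hypothetical USD price
TASKS_PER_DAY = 10_000                 # hypothetical workload


def daily_cost(tokens_per_task: int) -> float:
    """Daily spend in USD for a workflow that consumes tokens_per_task per run."""
    return tokens_per_task * TASKS_PER_DAY / 1_000_000 * PRICE_PER_MILLION_INPUT_TOKENS


direct = daily_cost(150_000)  # orchestrating every step through the model
code = daily_cost(2_000)      # code execution
reduction = 1 - 2_000 / 150_000
print(f"direct: ${direct:,.2f}/day  code: ${code:,.2f}/day  reduction: {reduction:.1%}")
# → direct: $4,500.00/day  code: $60.00/day  reduction: 98.7%
```

Whatever the actual price, the ratio between the two workflows stays the same, so the relative saving holds.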

It’s more reliable

When your agent writes code, the resulting workflow is easier to test and debug, and it leaves less room for hallucination. You get actual error messages, not just “the model didn’t respond the way we hoped.” You can persist logic, validate output, and retry on failure.
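For example, retry logic that would be guesswork inside a prompt loop becomes a few lines of ordinary control flow. A hedged sketch; `with_retries` and the flaky `flaky_crm_lookup` tool are hypothetical.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.1) -> T:
    """Call fn, retrying on exceptions with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except Exception as exc:  # in practice, catch the specific errors your tool raises
            if attempt == attempts:
                raise  # out of attempts: surface the real error message
            delay = base_delay * 2 ** (attempt - 1)
            print(f"attempt {attempt} failed ({exc}); retrying in {delay:.2f}s")
            time.sleep(delay)
    raise ValueError("attempts must be at least 1")


calls = {"n": 0}


def flaky_crm_lookup() -> str:
    """Hypothetical tool call that times out twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("CRM timed out")
    return "record-42"


result = with_retries(flaky_crm_lookup, attempts=3, base_delay=0.01)
print(result)
```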

It’s more secure

In code execution environments, sensitive data doesn’t need to pass through the model unless you explicitly send it. You can tokenize or suppress anything private (emails, phone numbers, full transcripts, etc.) and still complete the workflow end-to-end. That’s a huge win for privacy-conscious teams working with real customer data.
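The idea of tokenizing sensitive values can be sketched in a few lines of Python. This hypothetical version handles email addresses only, and its regex is deliberately loose rather than a complete email validator; the model sees placeholders, and the real values are restored only when data is handed to the next tool.

```python
import re

EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def tokenize_emails(text: str) -> tuple[str, dict[str, str]]:
    """Replace each distinct email with a placeholder; return redacted text and lookup table."""
    mapping: dict[str, str] = {}

    def replace(match: re.Match) -> str:
        email = match.group(0)
        for placeholder, original in mapping.items():
            if original == email:
                return placeholder  # reuse the placeholder for repeated addresses
        placeholder = f"[EMAIL_{len(mapping) + 1}]"
        mapping[placeholder] = email
        return placeholder

    return EMAIL_PATTERN.sub(replace, text), mapping


def detokenize(text: str, mapping: dict[str, str]) -> str:
    """Restore real values outside the model, just before calling the next tool."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


original = "Contact jane.doe@example.com or ops@example.com; cc jane.doe@example.com."
redacted, mapping = tokenize_emails(original)
print(redacted)  # → Contact [EMAIL_1] or [EMAIL_2]; cc [EMAIL_1].
```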

It’s more extensible

Once an agent writes working code, it can reuse it, modularize logic into skills, or persist state across sessions. That’s when you stop thinking about agents as prompt responders and start thinking of them as autonomous operators that can build up a toolbox of repeatable behaviors over time.
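A minimal sketch of persisting a skill, assuming a hypothetical `skills/` directory (created under a temporary folder here) and plain Python modules as the storage format. The skill itself, `summarize_rows`, is an illustrative example; real agent frameworks may store and load skills differently.

```python
import importlib.util
import tempfile
from pathlib import Path

# Source code an agent might have written and verified in an earlier session.
SKILL_SOURCE = '''
def summarize_rows(rows, key):
    """Count rows per value of `key`."""
    counts = {}
    for row in rows:
        counts[row[key]] = counts.get(row[key], 0) + 1
    return counts
'''


def save_skill(skills_dir: Path, name: str, source: str) -> Path:
    """Persist agent-written code as a module so later sessions can import it."""
    skills_dir.mkdir(parents=True, exist_ok=True)
    path = skills_dir / f"{name}.py"
    path.write_text(source)
    return path


def load_skill(path: Path):
    """Import a saved skill module from its file path."""
    spec = importlib.util.spec_from_file_location(path.stem, path)
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)
    return module


skills_dir = Path(tempfile.mkdtemp()) / "skills"  # hypothetical location
path = save_skill(skills_dir, "summarize_rows", SKILL_SOURCE)
skill = load_skill(path)
counts = skill.summarize_rows([{"stage": "won"}, {"stage": "lost"}, {"stage": "won"}], "stage")
print(counts)
```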

At a higher level, this shift is about more than cost and performance; it’s about determinism. When agents rely solely on prompting, their behavior is often opaque: each decision gets reinvented on the fly, shaped by context quirks and model temperature. That does not scale for business-critical workflows.

Code execution changes that. It gives agents a stable, inspectable foundation: the ability to reason explicitly, to follow logic that’s both traceable and testable, to act in ways that developers (and security teams) can actually understand.
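Because each step is ordinary code, it can be checked like any other code. A hypothetical example: a filtering step over a 10,000-row export that returns only a count and a short preview to the model, followed by assertions a developer or CI job could run. The data and the `pending_orders_preview` helper are illustrative.

```python
def pending_orders_preview(rows: list[dict], limit: int = 5) -> dict:
    """Filter a large dataset in code; return only a count and a small preview."""
    pending = [row for row in rows if row["status"] == "pending"]
    return {"pending_count": len(pending), "preview": pending[:limit]}


# Hypothetical spreadsheet export with 10,000 rows.
rows = [{"order_id": i, "status": "pending" if i % 4 == 0 else "shipped"} for i in range(10_000)]
summary = pending_orders_preview(rows)

# The same input always yields the same output, so the behavior can be pinned down.
assert summary["pending_count"] == 2_500
assert [row["order_id"] for row in summary["preview"]] == [0, 4, 8, 12, 16]
print(summary)
```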

In other words, this is how we move from "watching what the model does" to knowing what the agent will do. Once that foundation is in place, everything else (e.g., performance, privacy, reusability) gets easier to build on.

What’s Next After MCP Adoption

Anthropic’s piece should be required reading for anyone designing serious agent workflows. It captures a truth that’s becoming obvious across the ecosystem: the next generation of AI agents will go beyond prompts into programming. And the infrastructure that supports them (protocols like MCP, secure runtimes, and smarter caching layers) will determine who scales efficiently and who drowns in token costs.

For many teams, adopting the Model Context Protocol has already been a game-changer. It turns a chaotic sprawl of tool-specific integrations into something consistent, modular, and sharable. It’s a real protocol layer for AI-native workflows.

But as Anthropic’s post highlights, protocols alone aren’t enough once your system scales. MCP solves connectivity, but what happens next is about orchestration, efficiency, and long-term maintainability. This shift won't happen all at once. But we're already seeing signs:

- Teams grappling with runaway context sizes and tool sprawl

- Developers hand-rolling fragile prompt glue just to make basic sequences work

- Demand for more determinism, auditability, and efficiency, especially in enterprise settings

What does this mean for building smarter, more scalable agent systems? It means rethinking agent-tool interaction as something programmable, composable, and built to last. We’ll have more to share soon about how we’re addressing this shift at Gentoro. Stay tuned!
